Install Python

Update the package lists and install the build dependencies

sudo apt update && sudo apt upgrade

sudo apt install wget build-essential libncursesw5-dev libssl-dev libsqlite3-dev tk-dev libgdbm-dev libc6-dev libbz2-dev libffi-dev zlib1g-dev

Download Python

cd ~

wget https://www.python.org/ftp/python/3.10.4/Python-3.10.4.tgz

Extract

tar xzf Python-3.10.4.tgz

Build

cd Python-3.10.4

./configure --enable-optimizations

Install

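# altinstall leaves the system python3 untouched; writing to /usr/local needs root

sudo make altinstall

Verify the installation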

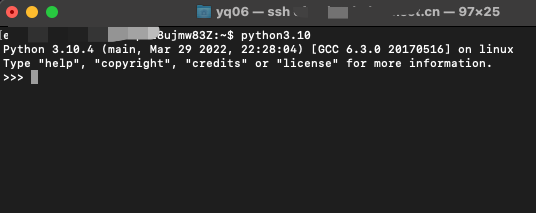

python3.10

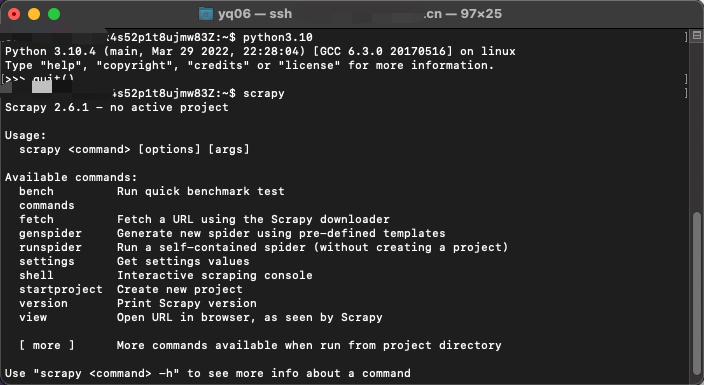
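The download, extract, and build steps above all depend on the same version string, and a mismatch between the tarball name and the directory name is an easy mistake to make. The following is a minimal sketch, not part of the original procedure, that derives every name from one variable. The helper function names are hypothetical, and the sketch only prints the commands rather than running them.

```shell
# Derive every version-dependent name from a single variable so the
# tarball, URL, and source directory cannot drift apart.
# All function names here are illustrative, not standard tools.
PY_VERSION="3.10.4"

python_tarball()    { echo "Python-$1.tgz"; }
python_source_dir() { echo "Python-$1"; }
python_source_url() { echo "https://www.python.org/ftp/python/$1/$(python_tarball "$1")"; }
# "3.10.4" -> "python3.10", the versioned binary name that altinstall creates
python_binary()     { echo "python${1%.*}"; }

# Dry run: print the commands instead of executing them.
echo "wget $(python_source_url "$PY_VERSION")"
echo "tar xzf $(python_tarball "$PY_VERSION")"
echo "cd $(python_source_dir "$PY_VERSION")"
echo "$(python_binary "$PY_VERSION") --version"
```

Changing PY_VERSION in one place then updates the URL, the archive name, and the directory passed to cd together.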

Install Scrapy

# scrapy

pip3.10 install scrapy

# Install additional dependencies as your project requires

pip3.10 install requests

pip3.10 install pillow

pip3.10 install PyMySQL
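If the same set of packages has to be installed on more than one server, it may be convenient to record them in a requirements file. The sketch below is one possible way to do that; the function name and the file location are assumptions made for illustration, and the actual install command is left as a comment so the snippet has no side effects beyond writing the file.

```shell
# Package names mirror the pip commands above; the file path is a
# hypothetical location chosen for this sketch.
write_requirements() {
    printf '%s\n' scrapy requests pillow PyMySQL > "$1"
}

REQ_FILE="${TMPDIR:-/tmp}/scrapy-requirements.txt"
write_requirements "$REQ_FILE"
cat "$REQ_FILE"

# Install with the interpreter built earlier (not executed here).
# Using "-m pip" ties pip to that exact interpreter:
#   python3.10 -m pip install -r "$REQ_FILE"
```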

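Run Scrapy

Upload the project to the server (steps omitted here); this guide assumes it lives in /etc/tutorial.

Change to the project root and run the spider:

# Project root; adjust to your actual path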

cd /etc/tutorial

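# Run the spider

scrapy crawl quotes

During this step you may see ModuleNotFoundError: No module named '_lzma'. This usually means Python was compiled without the liblzma headers; installing liblzma-dev and then rebuilding Python typically resolves it.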
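An error such as the missing _lzma module generally indicates that an optional standard-library module was skipped when Python was compiled. A quick way to spot this before running the spider is to try importing the modules in question. The helper below is a hypothetical sketch (check_modules is not a standard tool), and the module list in the example call is only a suggestion.

```shell
# check_modules PYTHON MODULE...
# Tries "import MODULE" with the given interpreter and reports each failure.
# Returns 0 when every module imports, 1 otherwise.
check_modules() {
    local py="$1"
    shift
    local mod status=0
    for mod in "$@"; do
        if ! "$py" -c "import ${mod}" >/dev/null 2>&1; then
            echo "missing: ${mod}"
            status=1
        fi
    done
    return "$status"
}

# Example call, guarded so the sketch is harmless where python3.10 is absent.
if command -v python3.10 >/dev/null 2>&1; then
    check_modules python3.10 lzma bz2 ssl sqlite3 ctypes || true
fi
```

Any module reported as missing points to a development package that was not present at build time; after installing it, Python has to be configured and built again for the module to appear.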

Scheduled crawling

Create a .sh file

# Assuming the script lives in /etc/tutorial

cd /etc/tutorial

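# Any file name works

touch quotes.sh

sudo vim quotes.sh

The contents of the .sh file are as follows. Note that scrapy must be invoked by its absolute path, which you can find with which scrapy; otherwise cron reports scrapy: command not found, because cron runs jobs with a minimal PATH.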

#!/bin/bash

cd /etc/tutorial

/usr/local/bin/scrapy crawl quotes

Give the .sh file execute permission (otherwise cron reports Permission denied)

chmod +x quotes.sh

Set up crontab

crontab -e

# Run the script daily at 14:00 and write its output to spider.log

0 14 * * * /etc/tutorial/quotes.sh > /etc/tutorial/spider.log 2>&1
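A crontab entry consists of five schedule fields (minute, hour, day of month, month, day of week) followed by the command to run. The sketch below builds such a line for a daily job from its parts, which may make the field order easier to see. The cron_line function is a hypothetical helper, and it uses >> so each run appends to the log, whereas a single > overwrites the log on every run.

```shell
# cron_line MINUTE HOUR SCRIPT LOG
# Prints a crontab entry that runs SCRIPT every day at HOUR:MINUTE and
# appends both stdout and stderr to LOG. Illustrative helper only.
cron_line() {
    local minute="$1" hour="$2" script="$3" log="$4"
    # Fields: minute hour day-of-month month day-of-week command
    printf '%s %s * * * %s >> %s 2>&1\n' "$minute" "$hour" "$script" "$log"
}

cron_line 0 14 /etc/tutorial/quotes.sh /etc/tutorial/spider.log
# prints: 0 14 * * * /etc/tutorial/quotes.sh >> /etc/tutorial/spider.log 2>&1
```

Absolute paths are used for both the script and the log because cron does not start jobs in the project directory.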